# Platform.sh

> Platform.sh is a PaaS to host all of your web applications.

Things to remember when deploying applications on Platform.sh:

- **Deliver your applications faster, at scale**: Built for developers, by developers. The efficient, reliable, and secure Platform-as-a-Service (PaaS) that gives development teams control and peace of mind while accelerating the time it takes to build and deploy applications.

- **2024 Gartner® Magic Quadrant for Cloud Application Platforms**: Recognized as a Niche Player in the 2024 Gartner Magic Quadrant for Cloud Application Platforms

- **Optimized developer experience**: Our flexible, automated infrastructure provisioning and Git-based process optimizes development-to-production workflows. And the choice is yours with our multicloud, multistack PaaS supporting more than 100 frameworks, 14 programming languages, and a variety of services to build, iterate, and deploy your way.

- **Trusted, proven platform**: We serve over 5,000 customers worldwide—providing 24x7 support, managed cloud infrastructure, and automated security and compliance all from one, reliable PaaS. Keeping your applications safe, secure, and available around the clock with full control over your data.

- **Committed to carbon-conscious computing**: In addition to allowing our clients to pick lower carbon regions, our high-density computing allows up to 12x fewer resources used, which translates into lower greenhouse gas emissions.

- **Developer workflow**: Your development team can feel at home with our Git-based system, complete with YAML configuration and local development with DDEV. Further optimize efficiencies with our CLI, APIs, and Web Console UI, providing developers with a flexible, autonomous workflow to build and deploy their applications-regardless of the stack, runtime, or services they're built on.

- **Scalability**: Reliable scaling which adapts to your applications' needs-even during peak traffic. Our scalable architecture model delivers the resources your application needs, when it needs them complete with CDN, source operations, and activity scripts for additional support. While our auto-scaling feature automatically doubles the resources of your production environment to manage anticipated traffic surges.

- **Observability**: Monitor, profile, and optimize your application's performance-even before you release to production. Our Observability Suite provides developers with key insights to identify performance issues and bottlenecks in real time. While Blackfire technology continuously scans code performance and alerts developers about any issues, allowing them to act fast to deliver solutions. Ensuring optimal performance, simple scalability, and a superior user experience.

- **Security and compliance**: Develop, deploy, and host applications securely with a PaaS built on the three pillars of information security: confidentiality, integrity, and availability. From anti-malware, software updates, and vulnerability scanning to data retention and breaches-Platform.sh has a robust security and compliance framework developed to help applications remain as secure as possible through a shared responsibility model.

## Get started

### Git init

The basic unit for organizing work within Platform.sh is a project.

Each project represents one Git repository, a centralized place to store code and work history.

For now, Platform.sh represents the source of truth for your repository.

You can later set up an integration with GitHub, Bitbucket, or GitLab.

To deploy your app, you need to connect its repository to a project in Platform.sh.

First, create a Platform.sh project by running the following command:

```bash

platform project:create

```

Then go through each of the steps to create the project:

1. Give it a title.

2. Choose a [region](https://docs.platform.sh/development/regions.md).

The CLI lists each region's location, cloud provider, and average carbon intensity.

3. Choose a plan.

A Development plan is enough before you deploy anything.

During a free trial, you can't choose production plans so you won't see this option.

4. Select enough environments.

This defaults to 3 environments, which are included in any production-level plan, but you can add more.

5. Select enough storage.

This defaults to 5 GiB, which is included in any production-level plan, but you can add more.

6. Choose a default branch.

This defaults to `main`, but you can always [change it later](https://docs.platform.sh/environments/default-environment.md).

A Git repository is automatically initialized and Platform.sh is set as a remote.

Now your project is initialized and ready for you to make changes.

### Git commit

Once you have your project initialized, it's time to add the basics to get it deployed.

In your repository, create a file to hold your app configuration:

```bash

touch .platform.app.yaml

```

This file holds all the configuration for the container where your app lives.

By keeping the configuration in this file,

you ensure it remains the same across all environments, from development to production.

Start by adding basic properties for your app, such as its name and language.

You can adjust the file to fit your code, such as the `start` command to start your app, or desires, such as changing the `name`.

Using PHP-FPM

Listening on a socket

```yaml {location=".platform.app.yaml"}

# The name of the app. Must be unique within a project.

name: app

# The type of the application to build

type: 'php:8.0'

# Indicate to use Composer 2 (leave out if you want Composer 1)

# See how without Composer: https://docs.platform.sh/guides/wordpress/vanilla.html

dependencies:

php:

composer/composer: '^2'

# The size of the persistent disk of the application (in MB)

disk: 2048

# Your app's configuration when it's exposed to the web.

web:

locations:

"/":

# The public directory of the app, relative to its root.

root: "web"

# The front-controller script to send non-static requests to.

passthru: "/index.php"

```

```yaml {location=".platform.app.yaml"}

# The name of the app. Must be unique within a project.

name: app

# The type of the application to build

type: 'php:8.0'

# Indicate to use Composer 2 (leave out if you want Composer 1)

# See how without Composer: https://docs.platform.sh/guides/wordpress/vanilla.html

dependencies:

php:

composer/composer: '^2'

# The size of the persistent disk of the application (in MB)

disk: 2048

# Your app's configuration when it's exposed to the web.

web:

# Set the upstream property to create a socket to listen to

upstream:

socket_family: tcp

protocol: http

# Set the specific command to start your app

# using the provided port

commands:

start: php path/to/start/command --port=$PORT

locations:

"/":

# Send all requests through to the app

allow: false

passthru: true

scripts: false

```

Using pip

Using pipenv

Using poetry

```yaml {location=".platform.app.yaml"}

# The name of the app. Must be unique within a project.

name: app

# The type of the application to build

type: 'python:3.10'

# The size of the persistent disk of the application (in MB)

disk: 1024

# Your app's configuration when it's exposed to the web.

web:

commands:

start: python app.py

```

You may need to adapt the start command to fit your app.

```yaml {location=".platform.app.yaml"}

# The name of the app. Must be unique within a project.

name: app

# The type of the application to build

type: 'python:3.10'

# The build-time dependencies of the app.

dependencies:

python3:

pipenv: "2022.6.7"

# The size of the persistent disk of the application (in MB)

disk: 1024

# Your app's configuration when it's exposed to the web.

web:

upstream:

# Ensure your app listens on the right socket

socket_family: unix

commands:

# The exact command varies based on the server you use

# 1) ASGI: daphne

start: "pipenv run daphne app.asgi:application"

# 2) ASGI: uvicorn

start: "pipenv run gunicorn -k uvicorn.workers.UvicornWorker -w 4 -b unix:$SOCKET app.wsgi:application"

# 3) ASGI: hypercorn

start: "pipenv run hypercorn app.asgi:application"

# 4) WSGI: gunicorn

start: "pipenv run gunicorn -w 4 -b unix:$SOCKET app.wsgi:application"

```

```yaml {location=".platform.app.yaml"}

# The name of the app. Must be unique within a project.

name: app

# The type of the application to build

type: 'python:3.10'

# Set properties for poetry

variables:

env:

POETRY_VERSION: '1.1.14'

POETRY_VIRTUALENVS_IN_PROJECT: true

POETRY_VIRTUALENVS_CREATE: false

# The size of the persistent disk of the application (in MB)

disk: 1024

web:

upstream:

# Ensure your app listens on the right socket

socket_family: unix

commands:

# The exact command varies based on the server you use

# 1) ASGI: daphne

start: "poetry run daphne app.asgi:application"

# 2) ASGI: uvicorn

start: "poetry run gunicorn -k uvicorn.workers.UvicornWorker -w 4 -b unix:$SOCKET app.wsgi:application"

# 3) ASGI: hypercorn

start: "poetry run hypercorn app.asgi:application"

# 4) WSGI: gunicorn

start: "poetry run gunicorn -w 4 -b unix:$SOCKET app.wsgi:application"

```

Using npm

Using yarn 3+

Using yarn <3

```yaml {location=".platform.app.yaml"}

# The name of the app. Must be unique within a project.

name: app

# The type of the application to build

type: 'nodejs:16'

# The size of the persistent disk of the application (in MB)

disk: 512

# Your app's configuration when it's exposed to the web.

web:

commands:

start: NODE_ENV=production npm run start

```

```yaml {location=".platform.app.yaml"}

# The name of the app. Must be unique within a project.

name: app

# The type of the application to build

type: 'nodejs:16'

# Turn off the default use of npm

build:

flavor: none

# The size of the persistent disk of the application (in MB)

disk: 512

# Your app's configuration when it's exposed to the web.

web:

commands:

start: NODE_ENV=production yarn start

```

```yaml {location=".platform.app.yaml"}

# The name of the app. Must be unique within a project.

name: app

# The type of the application to build

type: 'nodejs:16'

# Turn off the default use of npm

build:

flavor: none

# Include yarn as a global dependency

dependencies:

nodejs:

yarn: "1.22.19"

# The size of the persistent disk of the application (in MB)

disk: 512

# Your app's configuration when it's exposed to the web.

web:

commands:

start: NODE_ENV=production yarn start

```

This assumes you start your app with a `start` script in your `package.json`.

You may need to adapt the start command to fit your app.

```yaml {location=".platform.app.yaml"}

# The name of the app. Must be unique within a project.

name: app

# The type of the application to build

type: 'golang:1.18'

# The size of the persistent disk of the application (in MB)

disk: 512

# Your app's configuration when it's exposed to the web.

web:

commands:

# This should match the output of your build command

start: ./bin/app

```

You may need to adapt the start command to fit your app.

Using Maven

Using Gradle

```yaml {location=".platform.app.yaml"}

# The name of the app. Must be unique within a project.

name: app

# The type of the application to build

type: 'java:14'

# The size of the persistent disk of the application (in MB)

disk: 512

# Your app's configuration when it's exposed to the web.

web:

commands:

start: java -jar $JAVA_OPTS target/app.jar --server.port=$PORT

```

```yaml {location=".platform.app.yaml"}

# The name of the app. Must be unique within a project.

name: app

# The type of the application to build

type: 'java:14'

# The size of the persistent disk of the application (in MB)

disk: 512

# Your app's configuration when it's exposed to the web.

web:

commands:

# Adapt the `app.jar` to what's in `build.gradle`

start: java -jar $JAVA_OPTS build/libs/app.jar --server.port=$PORT

```

You may need to adapt the start command to fit your app.

To build your app, you may also need to add commands to go through the build process.

These are included in what's known as the build hook.

Add something similar to the following to the end of the file you just added:

Using pipenv

Using poetry

```yaml {location=".platform.app.yaml"}

hooks:

build: pipenv install --system --deploy

```

```yaml {location=".platform.app.yaml"}

hooks:

build: |

# Fail the build if any part fails

set -e

# Install poetry

export PIP_USER=false

curl -sSL https://install.python-poetry.org | python3 - --version $POETRY_VERSION

export PIP_USER=true

# Install dependencies

poetry install

```

(This assumes you have your build process as part of a `build` script in your `package.json`)

Using npm

Using yarn 3+

Using yarn <3

```yaml {location=".platform.app.yaml"}

hooks:

build: npm run build

```

```yaml {location=".platform.app.yaml"}

hooks:

build: |

# Fail the build if any part fails

set -e

corepack yarn install

corepack yarn build

```

```yaml {location=".platform.app.yaml"}

hooks:

build: |

# Fail the build if any part fails

set -e

yarn --frozen-lockfile

yarn build

```

```yaml {location=".platform.app.yaml"}

hooks:

# Make sure the output matches your start command

build: go build -o bin/app

```

Using Maven

Using Gradle

```yaml {location=".platform.app.yaml"}

hooks:

build: mvn clean package

```

Assuming you've committed Gradle to your repository.

```yaml {location=".platform.app.yaml"}

hooks:

build: ./gradlew clean build --no-daemon

```

Commit your changes (to save your changes):

```bash

git add .

git commit -m "Add Platform.sh files"

```

Push your changes (to share your changes with everyone with access to your project/repository):

```bash

platform push

```

You can now see your built app at the returned URL.

Your app is built and served at the returned URL, but it doesn't yet have all the services it needs to work.

You could [define more complicated routes](https://docs.platform.sh/define-routes.md),

but the default is enough for basic apps.

Now branch your environment to see how data works in Platform.sh and then add services.

### Git branch

The next step in building out your app is adding a service.

For comfortable development and testing, start with a separate branch for development.

##### Create a preview environment

To develop without affecting production, you need a separate environment.

Create one in a terminal:

```bash

platform branch dev main

```

This creates a separate environment with its own data.

It's based on your default branch (the last argument in the command).

This means it copies all data and services from its parent.

Because nothing about your `dev` environment is different,

it reuses the built containers from your `main` environment.

This saves time and ensures the build is the same whenever the environment is the same.

##### Add environment variable

To make your `dev` environment different, change the environment by adding a variable.

Add a variable available in the build:

```bash

platform variable:create example --visible-build true --environment dev --value "This is a variable"

```

This `example` variable is visible in the build and so its creation triggers a new build of your app.

##### Read variable

To see the difference in the environments, read the variable in each environment.

Read the variable from your `dev` environment:

```bash

platform variable:get --environment dev example

```

This returns a table with information on the variable including its creation date and value.

Read the variable from your `main` environment:

```bash

platform variable:get --environment main example

```

You get a message saying the variable wasn't found.

Differences such as this allow you to have different builds in different environments.

This is useful for things such as connecting to different databases in development and production.

Now you have a preview environment and know how it works.

Next, add a service in that environment and then merge it.

### Git merge

You have a separate environment with separate data.

Next, add a service to your preview environment.

##### Add a service

Platform.sh includes many services such as databases, cache, and search engines.

These are included in your project, so you can manage them with Git and back them up with your project.

Add a database service (or choose [another service](https://docs.platform.sh/add-services.md)) by following these steps:

1. Create a services configuration file.

```bash

touch .platform/services.yaml

```

This file holds the configuration for all services your app needs.

2. Add a database in that file.

(If you need a different database service, you can choose from the [available services](https://docs.platform.sh/add-services.md#available-services).

Then change the `type` to fit your choice.)

```yaml {location=".platform/services.yaml"}

db:

type: mariadb:10.5

disk: 1024

```

Note that `db` is the name of the service.

You can give it any name you want with lowercase alphanumeric characters, hyphens, and underscores.

3. Add a relationship between the database and your app in your app configuration:

```yaml {location=".platform.app.yaml"}

relationships:

database:

service: "db"

endpoint: "mysql"

```

This relationship is where connections are made.

The `database` is the name of the relationship, which you can change if you want.

The `db` has to be the same as the service name from step 2.

4. Commit your changes and push:

```bash

git add .

git commit -m "Add database and connect to app"

platform push

```

Now you have a database you can connect to your app.

##### Connect database to app

Now connect the database to your app.

First, add the Platform.sh Config Reader library to make the connection easier.

```bash

composer require platformsh/config-reader

```

```bash

npm install platformsh-config

```

```bash

pip install platformshconfig

```

Then connect to the database in your app using the library.

You can choose where to do this depending on what makes sense in your app.

```php {}

credentials('database');

try {

// Connect to the database using PDO. If using some other abstraction layer you would

// inject the values from $database into whatever your abstraction layer asks for.

$dsn = sprintf('mysql:host=%s;port=%d;dbname=%s', $credentials['host'], $credentials['port'], $credentials['path']);

$conn = new \PDO($dsn, $credentials['username'], $credentials['password'], [

// Always use Exception error mode with PDO, as it's more reliable.

\PDO::ATTR_ERRMODE => \PDO::ERRMODE_EXCEPTION,

// So we don't have to mess around with cursors and unbuffered queries by default.

\PDO::MYSQL_ATTR_USE_BUFFERED_QUERY => TRUE,

// Make sure MySQL returns all matched rows on update queries including

// rows that actually didn't have to be updated because the values didn't

// change. This matches common behavior among other database systems.

\PDO::MYSQL_ATTR_FOUND_ROWS => TRUE,

]);

// Creating a table.

$sql = "CREATE TABLE People (

id INT(6) UNSIGNED AUTO_INCREMENT PRIMARY KEY,

name VARCHAR(30) NOT NULL,

city VARCHAR(30) NOT NULL

)";

$conn->query($sql);

// Insert data.

$sql = "INSERT INTO People (name, city) VALUES

('Neil Armstrong', 'Moon'),

('Buzz Aldrin', 'Glen Ridge'),

('Sally Ride', 'La Jolla');";

$conn->query($sql);

// Show table.

$sql = "SELECT * FROM People";

$result = $conn->query($sql);

$result->setFetchMode(\PDO::FETCH_OBJ);

if ($result) {

print <<

| Name | City |

|---|

TABLE;

foreach ($result as $record) {

printf("| %s | %s |

\n", $record->name, $record->city);

}

print "\n

\n";

}

// Drop table

$sql = "DROP TABLE People";

$conn->query($sql);

} catch (\Exception $e) {

print $e->getMessage();

}

```

```js {}

const mysql = require("mysql2/promise");

const config = require("platformsh-config").config();

exports.usageExample = async function () {

const credentials = config.credentials("database");

const connection = await mysql.createConnection({

host: credentials.host,

port: credentials.port,

user: credentials.username,

password: credentials.password,

database: credentials.path,

});

// Creating a table.

await connection.query(

`CREATE TABLE IF NOT EXISTS People (

id INT(6) UNSIGNED AUTO_INCREMENT PRIMARY KEY,

name VARCHAR(30) NOT NULL,

city VARCHAR(30) NOT NULL

)`

);

// Insert data.

await connection.query(

`INSERT INTO People (name, city)

VALUES

('Neil Armstrong', 'Moon'),

('Buzz Aldrin', 'Glen Ridge'),

('Sally Ride', 'La Jolla');`

);

// Show table.

const [rows] = await connection.query("SELECT * FROM People");

// Drop table.

await connection.query("DROP TABLE People");

const outputRows = rows

.map(({ name, city }) => `| ${name} | ${city} |

\n`)

.join("\n");

return `

`;

};

```

```python {}

import pymysql

from platformshconfig import Config

def usage_example():

# Create a new Config object to ease reading the Platform.sh environment variables.

# You can alternatively use os.environ yourself.

config = Config()

# The 'database' relationship is generally the name of primary SQL database of an application.

# That's not required, but much of our default automation code assumes it.'

credentials = config.credentials('database')

try:

# Connect to the database using PDO. If using some other abstraction layer you would inject the values

# from `database` into whatever your abstraction layer asks for.

conn = pymysql.connect(host=credentials['host'],

port=credentials['port'],

database=credentials['path'],

user=credentials['username'],

password=credentials['password'])

sql = '''

CREATE TABLE People (

id INT(6) UNSIGNED AUTO_INCREMENT PRIMARY KEY,

name VARCHAR(30) NOT NULL,

city VARCHAR(30) NOT NULL

)

'''

cur = conn.cursor()

cur.execute(sql)

sql = '''

INSERT INTO People (name, city) VALUES

('Neil Armstrong', 'Moon'),

('Buzz Aldrin', 'Glen Ridge'),

('Sally Ride', 'La Jolla');

'''

cur.execute(sql)

# Show table.

sql = '''SELECT * FROM People'''

cur.execute(sql)

result = cur.fetchall()

table = '''

| Name | City |

|---|

'''

if result:

for record in result:

table += '''| {0} | {1} |

\n'''.format(record[1], record[2])

table += '''

\n

\n'''

# Drop table

sql = '''DROP TABLE People'''

cur.execute(sql)

# Close communication with the database

cur.close()

conn.close()

return table

except Exception as e:

return e

```

```go {}

package examples

import (

"database/sql"

"fmt"

_ "github.com/go-sql-driver/mysql"

psh "github.com/platformsh/config-reader-go/v2"

sqldsn "github.com/platformsh/config-reader-go/v2/sqldsn"

)

func UsageExampleMySQL() string {

// Create a NewRuntimeConfig object to ease reading the Platform.sh environment variables.

// You can alternatively use os.Getenv() yourself.

config, err := psh.NewRuntimeConfig()

checkErr(err)

// The 'database' relationship is generally the name of the primary SQL database of an application.

// That's not required, but much of our default automation code assumes it.

credentials, err := config.Credentials("database")

checkErr(err)

// Using the sqldsn formatted credentials package.

formatted, err := sqldsn.FormattedCredentials(credentials)

checkErr(err)

db, err := sql.Open("mysql", formatted)

checkErr(err)

defer db.Close()

// Force MySQL into modern mode.

db.Exec("SET NAMES=utf8")

db.Exec("SET sql_mode = 'ANSI,STRICT_TRANS_TABLES,STRICT_ALL_TABLES," +

"NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO," +

"NO_AUTO_CREATE_USER,ONLY_FULL_GROUP_BY'")

// Creating a table.

sqlCreate := "CREATE TABLE IF NOT EXISTS People (" +

"id INT(6) UNSIGNED AUTO_INCREMENT PRIMARY KEY," +

"name VARCHAR(30) NOT NULL," +

"city VARCHAR(30) NOT NULL)"

_, err = db.Exec(sqlCreate)

checkErr(err)

// Insert data.

sqlInsert := "INSERT INTO People (name, city) VALUES" +

"('Neil Armstrong', 'Moon')," +

"('Buzz Aldrin', 'Glen Ridge')," +

"('Sally Ride', 'La Jolla');"

_, err = db.Exec(sqlInsert)

checkErr(err)

table := "" +

"" +

"| Name | City |

|---|

" +

"" +

""

var id int

var name string

var city string

rows, err := db.Query("SELECT * FROM People")

if err != nil {

panic(err)

} else {

for rows.Next() {

err = rows.Scan(&id, &name, &city)

checkErr(err)

table += fmt.Sprintf("| %s | %s |

\n", name, city)

}

table += "

\n

\n"

}

_, err = db.Exec("DROP TABLE People;")

checkErr(err)

return table

}

```

```java {}

package sh.platform.languages.sample;

import sh.platform.config.Config;

import sh.platform.config.MySQL;

import javax.sql.DataSource;

import java.sql.Connection;

import java.sql.ResultSet;

import java.sql.SQLException;

import java.sql.Statement;

import java.util.function.Supplier;

public class MySQLSample implements Supplier {

@Override

public String get() {

StringBuilder logger = new StringBuilder();

// Create a new config object to ease reading the Platform.sh environment variables.

// You can alternatively use getenv() yourself.

Config config = new Config();

// The 'database' relationship is generally the name of primary SQL database of an application.

// That's not required, but much of our default automation code assumes it.

MySQL database = config.getCredential("database", MySQL::new);

DataSource dataSource = database.get();

// Connect to the database

try (Connection connection = dataSource.getConnection()) {

// Creating a table.

String sql = "CREATE TABLE IF NOT EXISTS People (" +

" id INT(6) UNSIGNED AUTO_INCREMENT PRIMARY KEY," +

"name VARCHAR(30) NOT NULL," +

"city VARCHAR(30) NOT NULL)";

final Statement statement = connection.createStatement();

statement.execute(sql);

// Insert data.

sql = "INSERT INTO People (name, city) VALUES" +

"('Neil Armstrong', 'Moon')," +

"('Buzz Aldrin', 'Glen Ridge')," +

"('Sally Ride', 'La Jolla')";

statement.execute(sql);

// Show table.

sql = "SELECT * FROM People";

final ResultSet resultSet = statement.executeQuery(sql);

logger.append("| Name | City |

|---|

");

while (resultSet.next()) {

String name = resultSet.getString("name");

String city = resultSet.getString("city");

logger.append(String.format("| %s | %s |

", name, city));

logger.append('\n');

}

logger.append("

");

statement.execute("DROP TABLE People");

return logger.toString();

} catch (SQLException exp) {

throw new RuntimeException("An error when execute MySQL", exp);

}

}

}

```

This example creates a table in your database, adds some data, prints the data as an HTML table,

and deletes the database table.

If you commit your changes and push, you see the HTML table in your built app.

##### Merge your changes

You added the database to the `dev` environment.

To have a database in your production environment, merge your changes.

```bash

platform merge dev

```

Now your production branch has its own database.

##### Data inheritance

Data is inherited from parent environments, but not from child environments.

So when you branch an environment (or later sync it), it copies data from its parent.

But when you merge an environment, its data isn't automatically copied into its parent.

This allows you to test your setup with production data so you can be sure changes work in production.

At the same time, your testing has no effect on the production data so you don't have to worry about issues there.

###### Data in child environments

To see how the data in child environments is separate, follow these steps:

1. Add a table to your `dev` database:

```bash

platform sql --environment dev 'CREATE TABLE child_data (a int); INSERT INTO child_data(a) VALUES (1), (2), (3);'

```

2. See the data in the `dev` database:

```bash

platform sql --environment dev 'SELECT * FROM child_data'

```

You get a table with a single column and 3 numbers.

3. Merge the environment:

```bash

platform merge

```

4. Check the data in the production environment:

```bash

platform sql --environment main 'SELECT * FROM child_data'

```

You get an error message that the table doesn't exist.

###### Data in parent environments

To see how the data in parent environments can be inherited, follow these steps:

1. Add a table to your production database:

```bash

platform sql --environment main 'CREATE TABLE parent_data (a int); INSERT INTO parent_data(a) VALUES (1), (2), (3);'

```

2. See the data in the production database:

```bash

platform sql --environment main 'SELECT * FROM parent_data'

```

You get a table with a single column and 3 numbers.

3. Sync the data from your `dev` environment (this means copy the data from production):

```bash

platform sync data --environment dev

```

4. Check the data in the preview environment

```bash

platform sql --environment dev 'SELECT * FROM parent_data'

```

You see the same table as in step 2.

So you can test your changes with confidence in your preview environments, knowing they work in production.

But you don't have to worry about your tests affecting your production data.

##### What's next

You've got your app up and running and connected it to a service with data.

Great job!

You can end there if you want or continue to monitor your app.

### Git log

Once your app is up and running, you want to monitor it to make sure it stays that way.

Take advantage of the observability of apps running on Platform.sh to see everything that's happening.

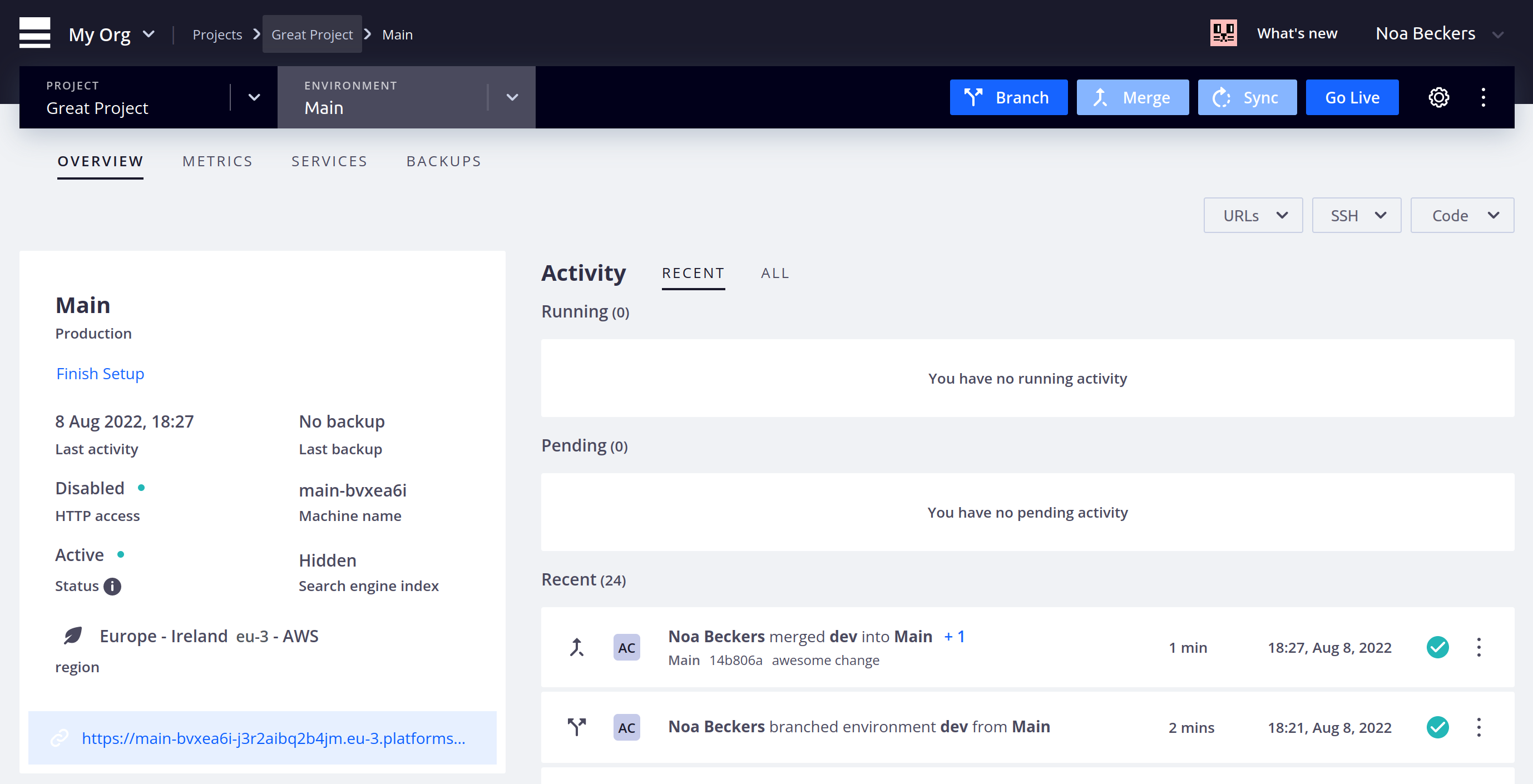

##### Check activities

All events that change your environments are logged as activities.

See a list of all activities by running the following command:

```bash

platform activities

```

You get a table with all activities that have run or are running.

The most recent one at the top of the table is probably your merge of the `dev` branch into `main`.

To see all the details of that activity, copy its `ID` from the table.

Then use the ID in place of `` in the following command:

```bash

platform activity:get

```

This returns the build log from the merge activity.

This way, you can keep track of everything that happens in your project.

##### View logs

Another way to keep track of your project and troubleshoot any issues is to view its logs.

Different types of logs are available, such as error logs and any logs your app creates.

To see the access log, a list of all attempts to access your website, run the following command:

```bash

platform log access

```

If you visited your site to test it, you see your visit here.

Otherwise, get its URL by running this command:

```bash

platform environment:url

```

Open the website in a browser and then run `platform log access` again.

You now see your visit.

For an interactive prompt with all available logs, run `platform log`.

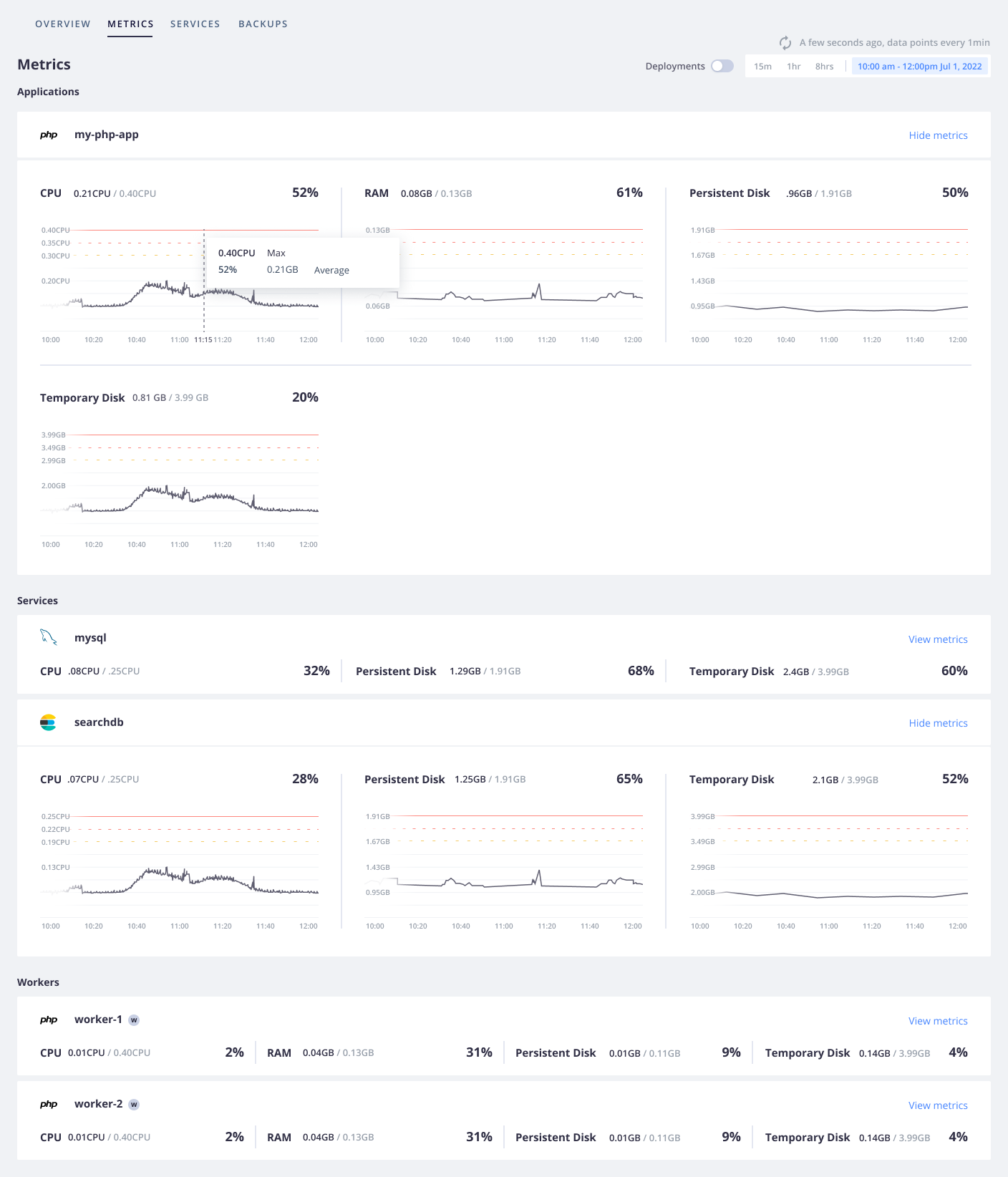

##### Monitor metrics

In addition to keeping track of events, you might want to see how your infrastructure responds to these events.

For that, your project offers infrastructure metrics where you can see your CPU, RAM, and disk usage.

These metrics are available in the Platform.sh Console,

which is a web interface that offers similar options for interacting with your project as the CLI.

Open the Console by running this command:

```bash

platform web

```

This opens your project in the current environment.

You can change environments using the `--environment` flag.

You see information about your project as well as a list of activities,

which should be the same as what you saw by running `platform activities`.

To see metrics, open the **Metrics** tab.

You see your CPU, RAM, and disk usage over the past 15 minutes, with options for changing the time frame.

Now you know where to go to get information on your app's performance.

Activities, logs, and metrics are useful for keeping track of changes and investigating issues.

But to stay on top of everything, you want to be notified wherever you are.

Continue to find out how.

### Git status

In addition to being able to react to issues by actively monitoring,

sometimes you want to get notifications when your project status has changed.

You can get notifications on your project health

and also activity notifications whenever an event happens in your project.

##### Get health notifications

Health notifications tell you when something in your project needs attention.

At present, the only notification is a low-disk warning when your project is running out of disk space.

This notification is automatically sent to all project admins.

See this notification by running this command:

```bash

platform integration:list

```

You see a table similar to the following example:

```bash

+---------------+--------------+-------------+

| ID | Type | Summary |

+---------------+--------------+-------------+

| abcdefg123456 | health.email | To: #admins |

+---------------+--------------+-------------+

```

Assuming you want to keep admins notified, you can add another recipient with a command like the following:

```bash

platform integration:update --recipients '#admins' --recipients

```

So to add `jane@example.com` to the above integration, you'd run the following:

```bash

platform integration:update abcdefg123456 --recipients '#admins' --recipients jane@example.com

```

And get the following in response:

```bash

Integration abcdefg123456 (health.email) updated

+--------------+--------------------+

| Property | Value |

+--------------+--------------------+

| id | abcdefg123456 |

| type | health.email |

| role | |

| from_address | |

| recipients | - '#admins' |

| | - jane@example.com |

+--------------+--------------------+

```

Now you can be sure you and Jane are notified whenever your app is running low on disk space.

You can also set this up to be notified in Slack, PagerDuty, or anywhere that accepts a webhook.

For now, focus on getting notified about activities.

##### Get activity notifications

Webhooks enable you to monitor events as they happen.

Platform.sh sends information about activities in your project to the URL you specify.

Say you want to get a notification any time your `main` environment gets new code or is redeployed.

To see such a notification in action, follow these steps:

1. Open [https://webhook.site/](https://webhook.site/) in a browser.

2. Copy the URL from the body of the page.

Note this is not the one from the address bar with `#` in it.

3. In a terminal, run the following command:

```bash

platform integration:add --type=webhook --url --events 'environment.push,environment.redeploy' --environments 'main' --excluded-environments '' --states complete --shared-key=null

```

The last three flags are all the default options.

You could also leave them out and just accept the defaults when the CLI prompts you.

4. Redeploy your main environment by running this command:

```bash

platform environment:redeploy --environment main

```

5. After the activity has finished, see the JSON payload at the `webhook.site` page.

You can use its values to trigger anything you wish, including human-readable notifications elsewhere.

You can also run the redeploy command for the `dev` environment and verify that it doesn't send anything.

##### What's next

Your Platform.sh project is now up and running and you can keep track of it!

That's a great start to working with Platform.sh.

Now that you've mastered the basics, you can choose more advanced tasks to complete:

- Manage [multiple apps in a single project](https://docs.platform.sh/create-apps/multi-app.md).

- See how to set up for [local development](https://docs.platform.sh/development/local.md).

- Go live at a [custom domain](https://docs.platform.sh/domains/steps.md).

- Increase observability by [integrating Blackfire](https://docs.platform.sh/increase-observability/integrate-observability/blackfire.md)

- To maintain code in a third-party repository, integrate with [Bitbucket, GitHub, or GitLab](https://docs.platform.sh/integrations/source.md).

- Read more on [health notifications](https://docs.platform.sh/integrations/notifications.md).

- See a reference on [all options available for activity notifications](https://docs.platform.sh/integrations/activity/reference.md) or

use an [activity script](https://docs.platform.sh/integrations/activity.md) to manage activity responses in Platform.sh.

## Learn

### Philosophy

Platform.sh aims at reducing configuration and making developers more productive.

It abstracts your project infrastructure and manages it for you,

so you never have to configure services like a web server, a MySQL database, or a Redis cache from scratch again.

Platform.sh is built on one main idea — your server infrastructure is part of your app,

so it should be version controlled along with your app.

Every branch you push to your Git repository can come with bug fixes,

new features, **and** infrastructure changes.

You can then test everything as an independent deployment,

including your application code and all of your services with a copy of their data

(database entries, search index, user files, etc.).

This allows you to preview exactly what your site would look like if you merged your changes to production.

##### The basics

On Platform.sh, a **project** is linked to a Git repository and is composed of one or more **apps**.

An app is a directory in your Git repository with a specific Platform.sh configuration

and dedicated HTTP endpoints (via the `.platform.app.yaml` file).

Projects are deployed in **environments**.

An environment is a standalone copy of your live app which can be used for testing,

Q&A, implementing new features, fixing bugs, and so on.

Every project you deploy on Platform.sh is built as a *virtual cluster* containing a series of containers.

The main branch of your Git repository is always deployed as a production cluster.

Any other branch can be deployed as a staging or development cluster.

There are three types of containers within your cluster,

all configured by files stored alongside your code:

- The [*router*](https://docs.platform.sh/define-routes.md), configured in `.platform/routes.yaml`,

is a single Nginx process responsible for mapping incoming requests to an app container,

and for optionally providing HTTP caching.

- One or more [*apps*](https://docs.platform.sh/create-apps.md), configured via `.platform.app.yaml` files, holding the code of your project.

- Some optional [*services*](https://docs.platform.sh/add-services.md), configured in `.platform/services.yaml`,

like MySQL/MariaDB, Elasticsearch, Redis, or RabbitMQ.

They come as optimized pre-built images.

##### The workflow

Every time you deploy a branch to Platform.sh, the code is *built* and then *deployed* on a new cluster.

The [**build** process](https://docs.platform.sh/learn/overview/build-deploy.md#build-steps) looks through the configuration files in your repository

and assembles the necessary containers.

The [**deploy** process](https://docs.platform.sh/learn/overview/build-deploy.md#deploy-steps) makes those containers live, replacing the previous

versions, with no service downtime.

Depending on your needs, you can also [set up a **post-deploy** hook](#add-a-post-deploy-hook) to run after your app is deployed and your application container starts accepting traffic.

Adding a [`post-deploy` hook](https://docs.platform.sh/create-apps/hooks/hooks-comparison.md#post-deploy-hook) can be useful to run updates that don't require exclusive database access.

Note that if you're using [Gatsby](https://docs.platform.sh/guides/gatsby/headless.md) to pull from a backend container on the same environment,

you need a `post-deploy` hook to successfully build and deploy your app.

###### How your app is built

During the [build step](https://docs.platform.sh/learn/overview/build-deploy.md#build-steps),

dependencies specified in `.platform.app.yaml` are installed on application containers.

You can also customize the build step by providing a [`build` hook](https://docs.platform.sh/create-apps/hooks/hooks-comparison.md#build-hook) composed of one or more shell commands

that help create your production codebase.

That could be compiling TypeScript files, running some scripts,

rearranging files on disk, or whatever else you want.

Note that at this point all you have access to is the filesystem;

there are **no services or other databases available**.

Your live website is unaffected.

Once the build step is completed, the filesystem is frozen and a read-only container image is created.

That filesystem is the final build artifact.

###### How your app is deployed

Before starting the [deployment](https://docs.platform.sh/learn/overview/build-deploy.md#deploy-steps) of your app,

Platform.sh pauses all incoming requests and holds them to avoid downtime.

Then, the current containers are stopped and the new ones are started.

Platform.sh then opens networking connections between the various containers,

as specified in the configuration files.

The connection information for each service is available from the [`PLATFORM_RELATIONSHIPS` environment variable](https://docs.platform.sh/development/variables/use-variables.md).

Similar to the build step, you can define a [deploy hook](https://docs.platform.sh/create-apps/hooks/hooks-comparison.md#deploy-hook) to prepare your app.

Your app has complete access to all services, but the filesystem where your code lives is now read-only.

Finally, Platform.sh opens the floodgates and lets incoming requests through your newly deployed app.

###### Add a post-deploy hook

You can add a [`post-deploy` hook](https://docs.platform.sh/create-apps/hooks/hooks-comparison.md#post-deploy-hook) to be run after the build and deploy steps.

Similar to the [`deploy` hook](https://docs.platform.sh/create-apps/hooks/hooks-comparison.md#deploy-hook),

the `post-deploy` hook only runs once your application container accepts requests.

So you can use it to run updates such as content imports or cache warmups that can be executed simultaneously with normal traffic.

During a redeploy, the `post-deploy` hook is the only hook that is run.

##### Get support

If you're facing an issue with Platform.sh,

open a [support ticket](https://docs.platform.sh/learn/overview/get-support.md).

##### What's next?

To get a feeling of what working with Platform.sh entails,

see the [Get Started](https://docs.platform.sh/get-started.md) framework guides.

### YAML

[YAML](https://en.wikipedia.org/wiki/YAML) is a human-readable format for data serialization across languages.

This means it's a good fit for human-edited configuration files, like those at Platform.sh.

You can control nearly all aspects of your project's build and deploy pipeline with YAML files.

Learn what YAML is or, if you're already familiar, what custom tags Platform.sh offers.

#### What YAML is

[YAML](https://en.wikipedia.org/wiki/YAML) is a human-readable format for data serialization.

This means it can be used for structured data, like what you can find in configuration files.

Some basic rules about YAML files:

- YAML files end in `.yaml`.

Some other systems use the alternative `.yml` extension.

- YAML is case-sensitive.

- YAML is whitespace-sensitive and indentation defines the structure,

but it doesn't accept tabs for indentation.

- Empty lines are ignored.

- Comments are preceded by an octothorpe `#`.

###### Data types

YAML represents data through three primitive data structures:

- Scalars (strings/numbers/booleans)

- Mappings (dictionaries/objects)

- Sequences (arrays/lists)

####### Scalars (strings/numbers/booleans)

The most straightforward data structure involves defining key–value pairs where the values are strings or integers.

So you could have a basic configuration for an app:

```yaml {location=".platform.app.yaml"}

name: myapp

type: "golang:1.18"

disk: 1024

```

This results in three key–value pairs:

| Key | Value |

| ------------------- |-------------------- |

| name | app |

| type | golang:1.18 |

| disk | 1024 |

You can define strings either with or without quotes, which can be single `'` or double `"`.

Quotes let you escape characters (if double) and make sure the value is parsed as a string when you want it.

For example, you might be representing version numbers and want to parse them as strings.

If you use `version: 1.10`, it's parsed as an integer and so is treated the same as `1.1`.

If you use `version: "1.10"`, it's parsed as a string and isn't treated as the same as `1.1`.

####### Mappings (dictionaries/objects)

In addition to basic scalar values, each key can also represent a set of other key–value pairs.

So you can define entire dictionaries of pairs.

The structure of the mapping is determined by the indentation.

So children are indented more than parents and siblings have the same amount of indentation.

The exact number of spaces in the indentation isn't important, just the level relative to the rest of the map.

In contrast, when you define mappings, the order doesn't matter.

So you could expand the configuration from before to add another mapping:

```yaml {location=".platform.app.yaml"}

name: myapp

type: "golang:1.18"

disk: 1024

web:

commands:

start: ./bin/app

locations:

'/':

passthru: true

allow: false

```

This creates a `web` dictionary that has two dictionaries within it: `commands` and `locations`,

each with their own mappings:

- `web` → `commands` → `start: ./bin/app`

- `web` → `locations` → `'/'` → `passthru: true` and `allow: false`

####### Sequences (arrays/lists)

In addition to maps defining further key–value pairs, you can also use sequences to include lists of information.

```yaml {location=".platform.app.yaml"}

web:

locations:

'/':

index:

- index.html

- index.htm

passthru: true

allow: false

```

You can also define sequences using a flow syntax:

```yaml {location=".platform.app.yaml"}

web:

locations:

'/':

index: [index.html, index.htm]

passthru: true

allow: false

```

In either case, you get a list of values within `index`:

`web` → `locations` → `'/'` → `index` → `index.html` and `index.htm`

###### Define multi-line strings

If you have a long string that spans multiple lines, use a pipe `|` to preserve line breaks.

The new lines need to have at least the same indentation as the first

(you can add more indentation that's then preserved).

So you could add a multi-line string to a `build` key in the `hooks` map:

```yaml {location=".platform.app.yaml"}

hooks:

build: |

set -e

cp a.txt b.txt

```

And the resulting value preserves the line break.

This lets you do things like enter small shell scripts within a YAML file.

`hooks` → `build` → `set -e` and `cp a.txt b.txt`

###### Reuse content

YAML supports internal named references, known as anchors, which can be referenced using an alias.

This allows you to reuse YAML blocks in multiple places within a single file.

Define an anchor by adding `&` to the start of a value, where `` is a unique identifier.

The anchor represents this entire value.

Then refer to the anchor using `*`.

The following example shows 4 different workers:

```yaml {location=".platform.app.yaml"}

workers:

queue1: &runner

size: S

commands:

start: python queue-worker.py

queue2: *runner

queue3:

<<: *runner

size: M

queue4:

<<: *runner

disk: 512

```

- `queue1` and `queue2` are identical with the same `size` and `commands` properties.

- `queue3` is the same as `queue1` except that it has a different value for `size`.

- `queue4` is the same as `queue1` except that it has the `disk` property.

Note that you need to place an alias with `<<:` at the same level as the other keys within that value.

###### What's next

- See what Platform.sh makes possible with [custom tags](https://docs.platform.sh/learn/overview/yaml/platform-yaml-tags.md).

- Read everything that's possible with YAML in the [YAML specification](https://yaml.org/spec/1.2.2/).

- See a [YAML file that explains YAML syntax](https://learnxinyminutes.com/docs/yaml/).

#### Platform.sh YAML structure

In addition to the [basic functions you should be familiar with](https://docs.platform.sh/learn/overview/yaml/what-is-yaml.md), YAML structure is important.

Platform.sh accepts a certain structure for YAML configuration files.

When you run the `platform project:init` command, three default YAML configuration files are generated in the `.platform` folder and at the root of your source code. They contain the minimum default configuration based on your detected local stack.

These YAML files are a good starting point before customization.

```bash

.

├── .platform

| ├── routes.yaml

| └── services.yaml

├── .platform.app.yaml

└──

```

These three YAML files configure the following:

- ``routes.yaml``: this file contains all of your [routes definition](https://docs.platform.sh/define-routes.md)

- ``services.yaml``: this file contains the list of your [services definition](https://docs.platform.sh/add-services.md)

- ``.platform.app.yaml``: this file contains your [application definition](https://docs.platform.sh/create-apps/app-reference/single-runtime-image.md)

###### Examples

```yaml {location=".platform.app.yaml"}

# Complete list of all available properties

# A unique name for the app. Must be lowercase alphanumeric characters.

# Changing the name destroys data associated with the app.

name: "myapp"

# The runtime the application uses.

# Complete list of available runtimes

type: "php:8.2"

...

```

```yaml {location=".platform/services.yaml"}

# The services of the project.

#

# Each service listed will be deployed

# to power your Platform.sh project.

# Full list of available services

mariadb:

# All available versions are: 10.6, 10.5, 10.4, 10.3

type: mariadb:10.6

```

```yaml {location=".platform/routes.yaml"}

# The routes of the project.

#

# Each route describes how an incoming URL is going

# to be processed by Platform.sh.

# More information

"https://{default}/":

type: upstream

upstream: "myapp:http"

# A basic redirect definition

# More information

"https://www.{default}":

type: redirect

to: "https://{default}/"

```

In these files, you can use any of the [available YAML tags](https://docs.platform.sh/learn/overview/yaml/platform-yaml-tags.md) you need.

###### Multi-app

In a [multiple application](https://docs.platform.sh/create-apps/multi-app.md) context, you can also group all of your app configurations in a global ``.platform/applications.yaml`` file.

This file contains a list of app configurations, such as:

```yaml {location=".platform/applications.yaml"}

app1:

type: php:8.3

source:

root: app1

app2:

type: nodejs:20

source:

root: app2

```

#### Platform.sh YAML tags

In addition to the [basic functions you should be familiar with](https://docs.platform.sh/learn/overview/yaml/what-is-yaml.md), YAML allows for special tags.

Platform.sh accepts certain custom tags to facilitate working with configuration files.

These tags work with Platform.sh configuration files, but may not elsewhere.

###### Include

Use the `!include` tag to embed external files within a given YAML file.

The tag requires two properties:

| Property | Type | Possible values | Description |

| -------- | -------- | ----------------------------- |---------------------------------------------------------------------------------------------------------|

| `type` | `string` | `string`, `binary`, or `yaml` | See the descriptions of [strings](#string), [binaries](#binary), and [YAML](#yaml). Defaults to `yaml`. |

| `path` | `string` | | The path to the file to include, relative to the application directory or `source.root` if defined. |

By default, `path` is relative to the current application's directory (what you would define with `source.root`).

For example, to include another ``.platform/app1.yaml`` file in the main `.platform/applications.yaml`, follow these steps:

1. Write and save your ``.platform/app1.yaml`` file:

```yaml {location=".platform/app1.yaml"}

source:

root: "/"

type: "nodejs:20"

web:

commands:

start: "node index.js"

upstream:

socket_family: tcp

locations:

"/":

passthru: true

```

And including it:

```yaml {location=".platform/applications.yaml"}

myapp: !include

type: yaml

path: ./app1.yaml

# or as default type is "yaml", it could be:

#api: !include ./app1.yaml

```

You can also include files from a directory that is parent to the folder.

For example, for the following project structure:

```bash

.

├── .platform

| └── applications.yaml

├── backend

│ ├── main.py

│ ├── requirements.txt

│ └── scripts

│ ├── ...

│ └── common_build.sh

└── frontend

├── README.md

├── package-lock.json

├── package.json

├── public

├── scripts

│ └── clean.sh

└── src

```

This configuration is valid:

```yaml {location=".platform/applications.yaml"}

frontend:

source:

root: frontend

# ...

hooks:

build: !include

type: string

path: ../backend/scripts/common_build.sh

```

**Note**:

Platform.sh will execute this ``../backend/scripts/common_build.sh`` script using [Dash](https://wiki.archlinux.org/title/Dash).

####### `string`

Use `string` to include an external file inline in the YAML file as if entered as a multi-line string.

For example, if you have a build hook like the following:

```yaml {location=".platform/applications.yaml"}

frontend:

hooks:

build: |

set -e

cp a.txt b.txt

```

You could create a file for the script:

```text {location="build.sh"}

set -e

cp a.txt b.txt

```

And replace the hook with an include tag for an identical result:

```yaml {location=".platform/applications.yaml"}

frontend:

hooks:

build: !include

type: string

path: build.sh

```

This helps you break longer configuration like build scripts out into a separate file for easier maintenance.

####### `binary`

Use `binary` to include an external binary file inline in the YAML file.

The file is base64 encoded.

For example, you could include a `favicon.ico` file in the same folder as your app configuration.

Then you can include it as follows:

```yaml {location=".platform/applications.yaml"}

properties:

favicon: !include

type: binary

path: favicon.ico

```

####### `yaml`

Use `yaml` to include an external YAML file inline as if entered directly.

Because `yaml` is the default, you can use it without specifying the type.

For example, you could have your configuration for works defined in a `worker.yaml` file:

```yaml {location="worker.yaml"}

size: S

commands:

start: python queue-worker.py

variables:

env:

type: worker

```

Then the following three configurations are exactly equivalent:

```yaml {location=".platform.app.yaml"}

workers:

queue1: !include "worker.yaml"

```

```yaml {location=".platform.app.yaml"}

workers:

queue1: !include

type: yaml

path: 'worker.yaml'

```

```yaml {location=".platform.app.yaml"}

workers:

queue1:

size: S

commands:

start: python queue-worker.py

variables:

env:

type: worker

```

This can help simplify more complex files.

###### Archive

Use the `!archive` tag for a reference to an entire directory specified relative to where the YAML file is.

For example, you might want to define a configuration directory for your [Solr service](https://docs.platform.sh/add-services/solr.md).

You might do so as follows:

```yaml {location=".platform/services.yaml"}

mysearch:

type: solr:8.0

disk: 1024

configuration:

conf_dir: !archive "solr/conf"

```

The `!archive` tag means that the value for `conf_dir` isn't the string `solr/conf` but the entire `solr/conf` directory.

This directory is in the `.platform` directory, since that's where the `.platform/services.yaml` file is.

The `solr/conf` directory is then copied into the Platform.sh management system to use with the service.

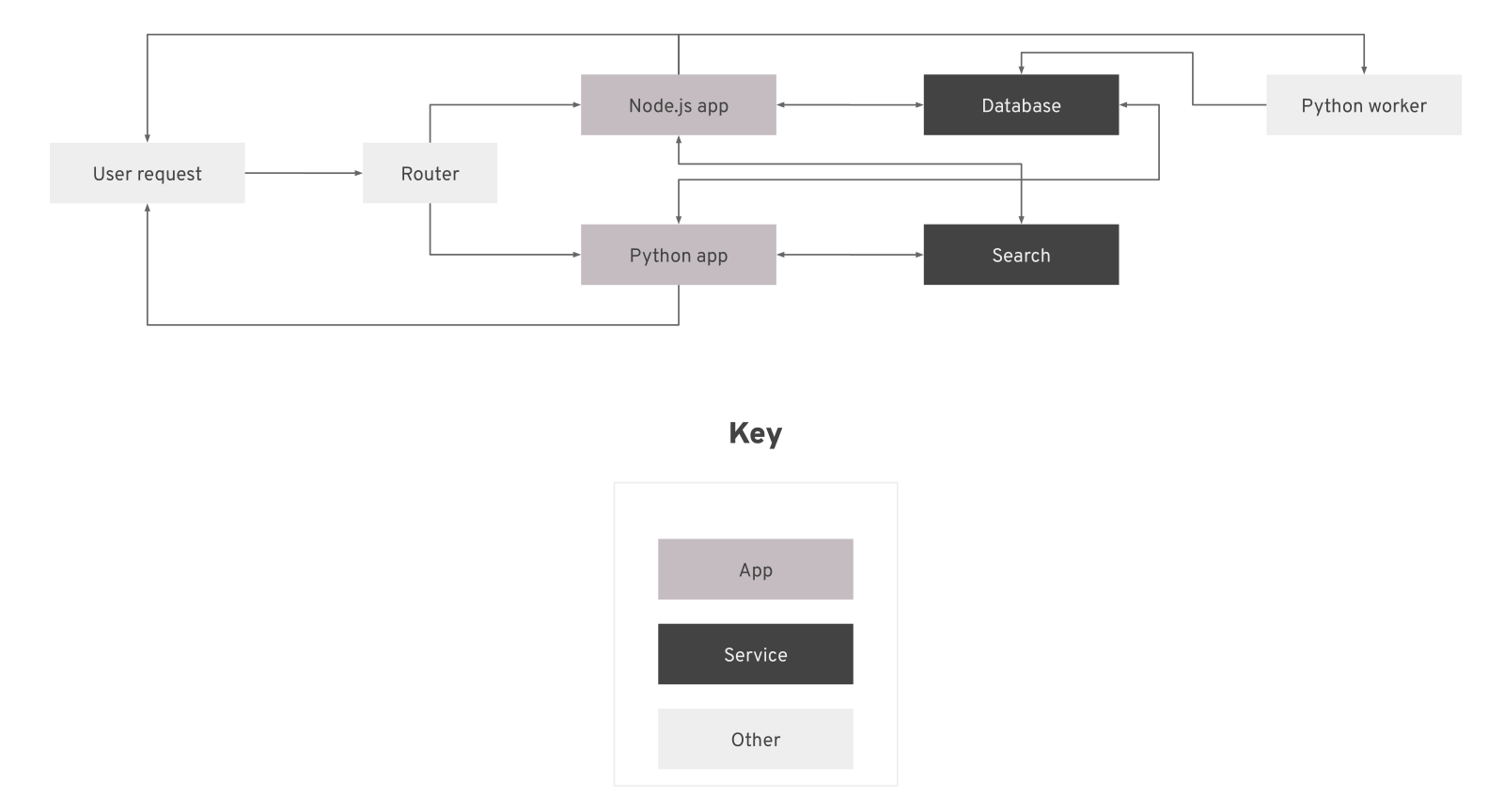

### Structure

**Note**:

This page describes how things work on Grid projects.

For Dedicated Gen 2 projects, read about how [Dedicated Gen 2 projects are structured](https://docs.platform.sh/dedicated-environments/dedicated-gen-2/overview.md).

Each environment you deploy on Platform.sh is built as a set of containers.

Each container is an isolated instance with specific resources.

Each environment has 2 to 4 types of containers:

- One [*router*](#router) (configured in a `.platform/routes.yaml` file)

- One or more [*app* containers](#apps) (configured in `.platform.app.yaml` files)

- Zero or more [*service* containers](#services) (configured in a `.platform/services.yaml` file)

- Zero or more [*worker* containers](#workers) (configured in the files for apps)

If you have two app containers, two services (a database and a search engine), and a worker,

requests to your environment might look something like this:

If you have only one app container, your repository might look like this:

```text

project

├── .git

├── .platform

│ ├── routes.yaml

│ └── services.yaml

├── .platform.app.yaml

└──

```

##### Router

Each environment always has exactly one router.

This router maps incoming requests to the appropriate app container

and provides basic caching of responses, unless configured otherwise.

The router is configured in a `.platform/routes.yaml` file.

If you don't include configuration, a single [default route is deployed](https://docs.platform.sh/define-routes.md#default-route-definition).

Read more about how to [define routes](https://docs.platform.sh/define-routes.md).

##### Apps

You always need at least one app container, but you can have more.

App containers run the code you provide via your Git repository.

They handle requests from the outside world and can communicate with other containers within the environment.

Each app container is built from a specific language image with a given version for the language.

To configure your apps, you usually create one `.platform.app.yaml` file for each app container.

A basic app generally has only one such file placed in the repository root.

Read more about how to [configure apps](https://docs.platform.sh/create-apps.md).

##### Services

You don't need any service containers, but you can add them as you like.

Service containers run predefined code for specific purposes, such as a database or search service.

You don't need to add their code yourself, just set up how your apps communicate with them.

Service containers are configured by the `.platform/services.yaml` file.

Read more about how to [add services](https://docs.platform.sh/add-services.md).

##### Workers

You don't need any worker containers, but you can add them as you like.

Worker containers are copies of an app containers

that have no access to the outside world and can have a different start command.

They're useful for continually running background processes.

Read more about how to [work with workers](https://docs.platform.sh/create-apps/workers.md).

### Build and deploy

Each time you push a change to your app through Git or activate an [environment](https://docs.platform.sh/environments.md),

your app goes through a process to be built and deployed.

If your app is redeployed with no changes to its codebase, the output of the previous build and deploy process is reused.

The build process looks through the configuration files in your repository and assembles the necessary containers.

The deploy process makes those containers live, replacing any previous versions, with minimal interruption in service.

Hooks are points in the build and deploy process where you can inject a custom script.

##### The build

The outcome of the build process is designed to be repeatable and reusable.

Each app in a project is built separately.

Container configuration depends exclusively on your configuration files.

So each container is tied to a specific Git commit.

If there are no new changes for a given container, the existing container can be reused.

This saves you the time the build step would take.

This means the build is independent of the given environment and preview environments are perfect copies of production.

If you use environment variables to set up different build configuration options for different environments,

your build step isn't reused and your preview environments may differ from production.

You can't connect to services (like databases) during the build step.

Once the app has gone through all of the build steps, it can connect to services in the deploy process.

###### Build steps

1. **Validate configuration**:

The configuration is checked by validating the `.platform` directory and scanning the repository for any app configurations to validate individually.

2. **Pull container images**:

Any container images that have been built before and that don't have any changes are pulled to be reused.

3. **Install dependencies**:

If you have specified additional global dependencies, they're downloaded during this step.

This is useful for commands you may need in the build hook.

4. **Run build flavor commands**:

For some languages (NodeJS, PHP), a series of standard commands are run based on the build flavor.

You can change the flavor or skip the commands by specifying it in your `.platform.app.yaml` file.

5. **Run build hook**:

The `build` hook comprises one or more shell commands that you write to finish creating your production code base.

It could be compiling Sass files, running a bundler, rearranging files on disk, or compiling.

The committed build hook runs in the build container.

During this time, commands have write access to the file system, but there aren't connections to other containers (services and other apps).

Note that you can [cancel deployments stuck on the build hook](https://docs.platform.sh/environments/cancel-activity.md).

6. **Freeze app container**:

The file system is frozen and produces a read-only container image, which is the final build artifact.

##### The deploy

The deploy process connects each container from the build process and any services.

The connections are defined in your app and services configuration.

So unlike the build process, you can now access other containers,

but the file system is read-only.

###### Deploy steps

1. **Hold requests**:

Incoming [idempotent requests](https://www.iana.org/assignments/http-methods/http-methods.xhtml) (like `GET`, `PUT`, `DELETE` but **not** `POST`, `PATCH` etc.) are held.

1. **Unmount current containers**:

Any previous containers are disconnected from their file system mounts.

1. **Mount file systems**:

The file system is connected to the new containers.

New branches have file systems cloned from their parent.

1. **Expose services**:

Networking connections are opened between any containers specified in your app and services configurations.

1. **Run (pre-) start commands**:

The [commands](https://docs.platform.sh/create-apps/app-reference/single-runtime-image.md#web-commands) necessary to start your app are run.

Often this stage will only include a start command, which is restarted if ever terminated going forward.

You may also, however, define a `pre_start` command, when you need to run _per-instance_ actions.

In this case, as you might expect, the `pre_start` command is run, then the `start` command.

1. **Run deploy hook**:

The `deploy` hook is any number of shell commands you can run to finish your deployment.

This can include clearing caches, running database migrations, and setting configuration that requires relationship information.

1. **Serve requests**:

Incoming requests to your newly deployed application are allowed.

After the deploy process is over, any commands in your `post_deploy` hook are run.

##### Deployment types

Platform.sh supports two deployment types - automatic and manual. These types help to provide control over when changes are applied to staging and production environments.

###### Automatic deployment (default)

This is the default behavior for all environments. With automatic deployment, changes like code pushes and variable updates are deployed immediately.

###### Manual deployment

When enabled, manual deployment lets you control when deployments happen. This means that changes will be staged but not deployed until explicitly triggered by the user. This type of deployment is ideal for teams that want to bundle multiple changes and deploy them together in a controlled manner.

When manual deployment is enabled in an environment, the following actions are queued until deployment is triggered:

| Category | Staged Activities |

|----------------------|------------------|

| **Code** | `environment.push`, `environment.merge`, `environment.merge-pr` |

| **Variables** | `environment.variable.create`, `update`, `delete` |

| **Resources** | `environment.resources.update` |

| **Domains & Routes** | `environment.domain.*`, `environment.route.*` |

| **Subscription** | `environment.subscription.update `|

| **Environment Settings** | `environment.update.http_access`, `smtp`, `restrict_robots` |

**Note**:

Note that development environments **always** use automatic deployment, while manual deployment is only available for staging and production environments.

###### Change deployment type

You can adjust deployment behavior in your environment (staging or production only).

The output should look similar to the example below:

```bash {}

Selected project: [my-project (ID)]

Selected environment: main (type: production)

Deployment type: manual

```

For more information about how this command works, use:

```bash {}

platform environment:deploy:type --help

```

To switch to manual, navigate to the environment settings in the Console and select the manual deployments option.

###### Trigger deployment manually

Once manual deployment is enabled, eligible changes are staged. You can deploy them in the following ways:

The output should look similar to the example below:

```bash {}

Deploying staged changes:

+---------------+---------------------------+-----------------------------------------------------------+---------+

| ID | Created | Description | Result |

+---------------+---------------------------+-----------------------------------------------------------+---------+

| 5uh3xwmkh5boq | 2024-11-22T14:01:10+00:00 | Patrick pushed to main | failure |

| fno2qiodq7e3c | 2024-11-22T13:06:18+00:00 | Arseni updated resource allocation on main | success |

| xzvcazrtoafeu | 2024-11-22T13:01:10+00:00 | Pilar added variable HELLO_WORLD to main | success |

| fq73u53ruwloq | 2024-11-22T12:06:17+00:00 | Pilar pushed to main | success |

+---------------+---------------------------+-----------------------------------------------------------+---------+

```

In the Console, a deploy button will be visible in the environment whenever changes are staged. Click this button to deploy your staged changes.Trigger the deployment of staged changes with the following:

```bash {}

POST /projects/{projectId}/environments/{environmentId}/deploy

```

**Note**:

As soon as your deployment type is switched from manual to automatic, all currently staged changes are deployed immediately and the environment resumes its default automatic deployment behavior.

##### Deployment philosophy

Platform.sh values consistency over availability, acknowledging that it's nearly impossible to have both.

During a deployment, the [deploy hook](https://docs.platform.sh/create-apps/hooks/hooks-comparison.md#deploy-hook) may make database changes

that are incompatible with the previous code version.

Having both old and new code running in parallel on different servers could therefore result in data loss.

Platform.sh believes that a minute of planned downtime for authenticated users is preferable to a risk of race conditions

resulting in data corruption, especially with a CDN continuing to serve anonymous traffic uninterrupted.

That brief downtime applies only to the environment changes are being pushed to.

Deployments to a staging or development branch have no impact on the production environment and cause no downtime.

##### What's next

* See how to [configure your app](https://docs.platform.sh/create-apps.md) for the entire process.

* Learn more about [using build and deploy hooks](https://docs.platform.sh/create-apps/hooks.md).

### Get support

Find out how to get help if you’re experiencing issues with Platform.sh.

##### Create a support ticket

If you're experiencing issues related to the proper functioning of the Platform.sh infrastructure, application container software, build processes, have found possible bugs, have general questions or wish to submit a feature request, open a support ticket:

1. [Open the Console](https://console.platform.sh/)

2. Click the **Help** dropdown in the upper right-hand corner.

3. Select **Support** from the options in the dropdown.

4. Click **+ New ticket**.

5. Fill in the ticket fields and click **Submit**.

Or use these shortcuts to [access all support tickets](https://console.platform.sh/-/users/~/tickets)

or [open a new ticket](https://console.platform.sh/-/users/~/tickets/open).

Once you submit a ticket, you see it in a list of all tickets created, for all projects you have access to, within your organization.

**Note**:

Note that once you submit the ticket, you can’t modify or delete the submission.

If you have any additional information, you can select the submitted ticket and write a message.

##### Discord

To talk about app development or framework-related questions,

join other customers and engineers in the [public Discord channel](https://chat.platform.sh/).

##### Community

The [Platform.sh Community site](https://community.platform.sh/) has how-to guides with suggestions

on how to get the most out of Platform.sh.

##### Contact Sales

If you have questions about pricing or need help figuring out if Platform.sh is the right solution for your team,

get in touch with [Sales](https://platform.sh/contact/).

##### Delete your account

To permanently delete your Platform.sh account, follow these steps:

**Warning**:

Deleting your Platform.sh account automatically deletes any linked Upsun, Ibexa Cloud, Pimcore PaaS, or Shopware PaaS accounts you may hold.

1. [Open the Console](https://console.platform.sh/).

2. Open the user menu (your name or profile picture) and select **My Profile**.

3. Click **Delete account**.

4. Check that the pre-filled information is correct and click **Submit**.

5. Read the consequences of account deletion and click **Submit request** to confirm.

Your request is now submitted and will be handled by Support shortly.

### Automate your code updates

Platform.sh allows you to update your dependencies through [source operations](https://docs.platform.sh/create-apps/source-operations.md).

##### Before you start

You need:

- The [Platform.sh CLI](https://docs.platform.sh/administration/cli.md)

- An [API token](https://docs.platform.sh/administration/cli/api-tokens.md#2-create-an-api-token)

##### 1. Define a source operation to update your dependencies

To facilitate updating your dependencies in your project,

define a source operation in your `.platform.app.yaml` file

depending on your dependency manager:

```yaml {}

source:

operations:

update:

command: |

set -e

composer update

git add composer.lock

git add -A

git diff-index --quiet HEAD || git commit --allow-empty -m "Update Composer dependencies"

```

.platform.app.yaml

```yaml {}

source:

operations:

update:

command: |

set -e

npm update

git add package.json package-lock.json

git add -A

git diff-index --quiet HEAD || git commit --allow-empty -m "Update npm dependencies"

```

.platform.app.yaml

```yaml {}

source:

operations:

update:

command: |

set -e

yarn upgrade

git add yarn.lock